Beyond the Face: What 'Deepfake' Technology Is Really Stealing from Us (and Why Your PhD Matters More Than Ever)

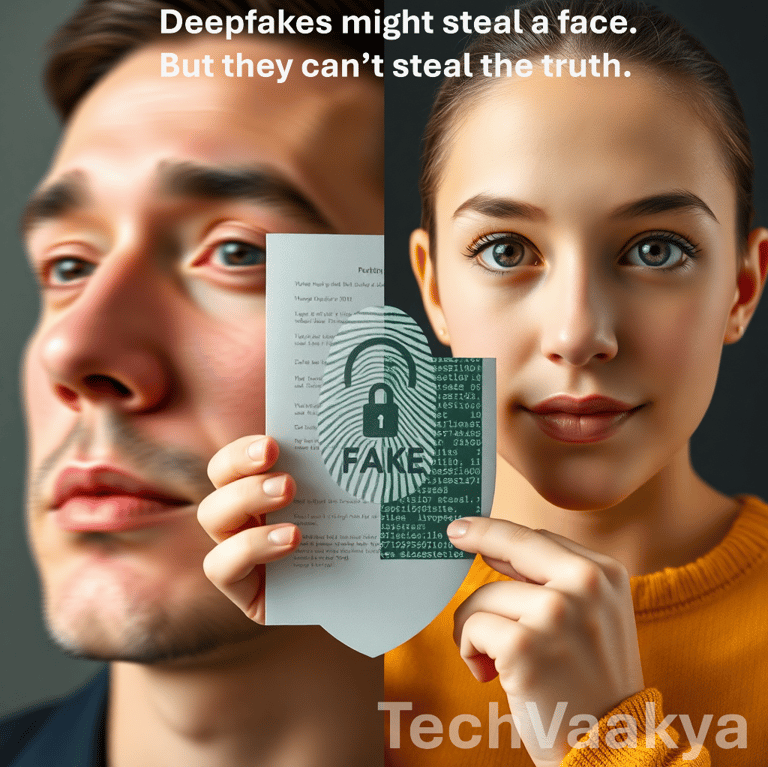

In a world where anyone's face or voice can be synthetically generated, deepfake technology is no longer just a novelty or a political threat. It's a full-scale assault on truth itself. This blog post unpacks the hidden danger behind deepfakes: not just the manipulation of celebrity images, but the erosion of trust in real experts, researchers, and scientific knowledge. We explore why the real frontline of this battle is academia and how PhD researchers, often overlooked, are uniquely equipped to defend digital reality. From ethical frameworks to data provenance, you'll learn why your PhD is more than a degree: it's a license to protect intellectual integrity in the age of generative AI. This is a call to arms for researchers, educators, and digital citizens alike. Will you take the Digital Oath?

The Celebrity Deepfake Nobody Noticed

Imagine this: a video goes viral.

In it, a world-renowned climate scientist—known for their calm, deliberate tone—delivers an explosive monologue. They're animated, emotional, and strangely off-brand. The speech is peppered with alarmist statistics and unverified claims. It spreads like wildfire.

News outlets pick it up. Politicians react. People start questioning climate science itself. But here's the twist:

It wasn't real.

The scientist never said those things. The video was a deepfake—so precise, so convincing, that even the scientist's own family had to watch it twice. And in that fleeting moment of doubt, something far more dangerous than digital manipulation occurred:

We lost trust.